Abstract

As artificial intelligence and machine learning systems are integrated into almost all facets of society, the need to understand such models is becoming paramount. Despite the phenomenal success of deep learning in many application domains, the explainability of such models has proved elusive. Recent work applying physics-inspired models and techniques to understand machine learning models and AI has yielded promising results [Lin 2017]. Machine learning has a long history of cross-fertilization with other domains, e.g., Shapley values from cooperative game theory [Molnar 2019], generalized linear models from statistics, Bayesian rule sets from frequent pattern mining [Wang 2016], and functional analysis in social networks. Ideas from physics have time and again provided fodder for conceptual developments in machine learning. In this tutorial we provide an overview of how ideas from physics have informed progress in machine learning. We also explore the history of the influence of physics on machine learning, which is often neglected in the computer science community, and how recent insights from physics hold the promise of opening the black box of deep learning. This history has shown that mapping a machine learning problem onto a class of physical models whose behavior and solutions are already known can help explain the inner workings of machine learning models [Carleo 2019]. Although this tutorial focuses on physics and machine learning, we do not assume that the audience has any advanced knowledge of physics. Lastly, we will describe current and future trends in this area and suggest a research agenda for how physics-inspired models can benefit machine learning. The salient highlights of the topics covered are as follows:

Foundations:

Machine learning has a long history of interaction with, and influence from, statistical physics going back to the 1980s. Statistical physics is a branch of physics that mainly employs methods of probability theory and statistics, especially for dealing with large populations and approximations, to solve physical problems. We will start with a brief, high-level overview of statistical physics and some well-known early examples of using physics to inform machine learning models, e.g., Hopfield's neural network model of associative memory [Hopfield 1982], which drew on concepts from spin glass theory. We will also describe how analytic statistical physics models have been used to show that the learning dynamics of some machine learning models can be better explored with methods from physics than with the worst-case, distribution-free PAC bounds that grew out of Valiant's theory of the learnable [Valiant 1984].
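
The spin-glass connection can be made concrete with a minimal NumPy sketch of a Hopfield-style associative memory. Patterns are stored with a Hebbian rule. Recall then runs asynchronous sign updates, and these never increase the Ising-like energy E(s) = -½ sᵀWs. The function names, the network size (100 units) and the three random patterns are illustrative assumptions. They are not details taken from [Hopfield 1982].

```python
import numpy as np

def store_patterns(patterns: np.ndarray) -> np.ndarray:
    """Hebbian couplings W = (1/N) * sum_mu xi_mu xi_mu^T, with no self-couplings."""
    n_units = patterns.shape[1]
    weights = patterns.T @ patterns / n_units
    np.fill_diagonal(weights, 0.0)
    return weights


def energy(weights: np.ndarray, state: np.ndarray) -> float:
    """Ising-like energy E(s) = -1/2 s^T W s."""
    return float(-0.5 * state @ weights @ state)


def recall(weights, state, rng, n_sweeps=10):
    """Asynchronous updates s_i <- sign(sum_j W_ij s_j) in random order.

    With symmetric W and zero diagonal, no single update can raise the energy.
    """
    state = state.copy()
    for _ in range(n_sweeps):
        for i in rng.permutation(len(state)):
            state[i] = 1 if weights[i] @ state >= 0 else -1
    return state


rng = np.random.default_rng(0)
patterns = rng.choice([-1, 1], size=(3, 100))          # 3 random patterns, 100 units
W = store_patterns(patterns)
noisy = patterns[0].copy()
noisy[rng.choice(100, size=10, replace=False)] *= -1   # corrupt 10 of the 100 bits
restored = recall(W, noisy, rng)
print("recovered:", np.array_equal(restored, patterns[0]))
```

At this low storage load, flipping 10 bits of a stored pattern and running recall should return the original pattern. As more patterns are stored, the crosstalk between them grows and recall eventually fails. This capacity limit is exactly the regime that spin-glass analyses characterize.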

Information Bottleneck & Deep Learning:

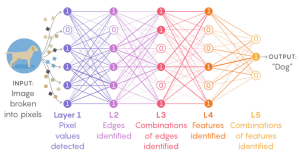

The concept of the information bottleneck [Tishby 2000] has its origins in statistical physics and has been highly influential in the theoretical understanding of deep learning. The information bottleneck theory of deep learning [Shwartz-Ziv 2017, Tishby 2015] aims to quantify the notion that the layers of a neural network trade off between two goals. They keep enough information about the input to predict the output labels, and they forget as much unnecessary information as possible so that the learned representation stays concise. One interesting consequence of this information-theoretic analysis concerns the network's traditional capacity or expressivity measure, such as the VC dimension. In the analysis, that measure is replaced by the exponent of the mutual information between the input and the compressed hidden-layer representation. This implies that every bit of representation compression is equivalent to doubling the training data in its impact on the generalization error.
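
The following is a minimal sketch of the information bottleneck objective from [Tishby 2000] for small discrete distributions. The objective is L = I(X;T) - β I(T;Y), minimized over a stochastic encoder p(t|x). The helper names and the toy parity example are illustrative assumptions. Deep-learning analyses such as [Shwartz-Ziv 2017] cannot compute these quantities exactly. They must instead estimate the mutual informations from hidden-layer activations, for example by binning.

```python
import numpy as np

def mutual_information(joint: np.ndarray) -> float:
    """I(A;B) in bits from a (possibly unnormalized) joint table p(a, b)."""
    joint = joint / joint.sum()
    p_a = joint.sum(axis=1, keepdims=True)
    p_b = joint.sum(axis=0, keepdims=True)
    independent = p_a * p_b                      # p(a) p(b) as a table
    mask = joint > 0                             # 0 log 0 is taken as 0
    return float(np.sum(joint[mask] * np.log2(joint[mask] / independent[mask])))


def ib_lagrangian(p_x, p_y_given_x, p_t_given_x, beta):
    """I(X;T) - beta * I(T;Y) for an encoder p(t|x), assuming the Markov chain T <- X -> Y."""
    p_xt = p_x[:, None] * p_t_given_x            # p(x, t)
    p_xy = p_x[:, None] * p_y_given_x            # p(x, y)
    p_ty = p_t_given_x.T @ p_xy                  # p(t, y) = sum_x p(t|x) p(x, y)
    return mutual_information(p_xt) - beta * mutual_information(p_ty)


# Toy problem: four equiprobable inputs whose label is their parity.
p_x = np.full(4, 0.25)
p_y_given_x = np.array([[1, 0], [0, 1], [1, 0], [0, 1]], dtype=float)
identity_encoder = np.eye(4)                     # keeps all 2 bits of X
parity_encoder = p_y_given_x.copy()              # keeps only the 1 relevant bit
for name, enc in [("identity", identity_encoder), ("parity", parity_encoder)]:
    print(name, ib_lagrangian(p_x, p_y_given_x, enc, beta=2.0))
```

Both encoders preserve the one bit of information about Y. The parity encoder, however, discards the irrelevant bit of X, so with β = 2 it attains the lower objective (-1 versus 0). This is the kind of compression that the text credits with improving generalization.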

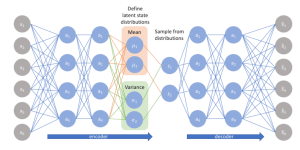

Auto-Encoders:

In deep learning, auto-encoders have been applied to a variety of applications with phenomenal success [Doersch 2016]. We will describe how variational autoencoders (VAEs) [Kingma 2013] have a direct analogue in physical models. VAEs are a variant of auto-encoders, and this analogy holds because the autoencoder can be represented as a probabilistic graphical model. Notably, VAEs with a single hidden layer are closely related to techniques widely used in signal processing and physics, such as dictionary learning and sparse coding [Kabashima 2016].
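
As a rough illustration of the probabilistic-model view, the sketch below evaluates the VAE training objective of [Kingma 2013], the negative evidence lower bound. It is the sum of two terms:

- a reconstruction term under a Gaussian decoder p(x|z);
- the closed-form KL divergence between a diagonal-Gaussian encoder q(z|x) and a standard-normal prior.

The latent z is drawn with the reparameterization trick. The linear encoder and decoder, the dimensions and the noise variance are hypothetical choices for illustration. With a single linear decoder layer, x ≈ z W_dec has the same form as the dictionary model used in dictionary learning and sparse coding. The difference lies in the prior placed on z.

```python
import numpy as np

def gaussian_kl(mu, log_var):
    """KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over latent dimensions."""
    return 0.5 * np.sum(np.exp(log_var) + mu**2 - 1.0 - log_var, axis=-1)


def negative_elbo(x, encode, decode, rng, noise_var=1.0):
    """Single-sample Monte Carlo estimate of -ELBO, averaged over the batch.

    encode(x) -> (mu, log_var) of q(z|x); decode(z) -> mean of p(x|z) = N(mean, noise_var * I).
    The Gaussian log-likelihood is computed up to an additive constant.
    """
    mu, log_var = encode(x)
    eps = rng.standard_normal(mu.shape)
    z = mu + np.exp(0.5 * log_var) * eps           # reparameterization trick
    x_hat = decode(z)
    recon = 0.5 * np.sum((x - x_hat) ** 2, axis=-1) / noise_var
    return float(np.mean(recon + gaussian_kl(mu, log_var)))


# Hypothetical linear encoder/decoder with a 2-dimensional latent space.
rng = np.random.default_rng(0)
d, k = 8, 2
W_enc = rng.standard_normal((d, k)) / np.sqrt(d)
W_dec = rng.standard_normal((k, d))

def encode(x):
    return x @ W_enc, np.full((x.shape[0], k), -1.0)   # fixed log-variance, for simplicity

def decode(z):
    return z @ W_dec

x = rng.standard_normal((16, d))
print(negative_elbo(x, encode, decode, rng))
```

In practice, W_enc, W_dec and the encoder variance would be learned by minimizing this quantity with a gradient-based optimizer. The sketch only evaluates the loss.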

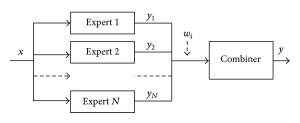

Committee Machine:

A committee machine is a special type of fully-connected neural network in which only the first-layer weights are learned, while the weights of the subsequent layers are fixed. These models have been studied extensively in physics [Engel 2001]. We will discuss some interesting results on committee machines that may offer insights into generalization in machine learning. When the number of training samples is small, the optimal error is achieved by a weight configuration that is the same for every hidden unit, which is effectively equivalent to simple regression. Only when the number of samples exceeds a threshold do the hidden units specialize and learn different weights, and the generalization error decreases as a consequence. Moreover, when the number of hidden units is large, it can be shown that there is a regime in which generalization is achievable information-theoretically but not by known efficient algorithms [Aubin 2018]. Lastly, we discuss how the committee machine was also used in [Goldt 2019] to analyze the consequences of over-parametrization in neural networks.
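
The teacher-student setting used in these analyses can be sketched in a few lines. Below, a committee machine with K = 3 hidden units computes sign(Σ_k sign(w_k·x/√N)), with the second-layer weights fixed to +1. A student's generalization error is estimated by Monte Carlo as its probability of disagreeing with the teacher on Gaussian inputs. The sizes and the Gaussian input distribution are illustrative assumptions. So is the hand-built "non-specialized" student, in which all hidden units share the average of the teacher's weight vectors. The sketch does not implement the learning algorithms or the analysis of [Aubin 2018].

```python
import numpy as np

def committee_output(weights, x):
    """weights: (K, N) first-layer weights; second-layer weights fixed to +1.

    Returns sign(sum_k sign(w_k . x / sqrt(N))); an odd K avoids ties in the vote.
    """
    n = weights.shape[1]
    hidden = np.sign(x @ weights.T / np.sqrt(n))
    return np.sign(hidden.sum(axis=-1))


def generalization_error(teacher, student, rng, n_test=20000):
    """Monte Carlo estimate of P[student(x) != teacher(x)] for x ~ N(0, I)."""
    x = rng.standard_normal((n_test, teacher.shape[1]))
    return float(np.mean(committee_output(teacher, x) != committee_output(student, x)))


rng = np.random.default_rng(0)
K, N = 3, 100
teacher = rng.standard_normal((K, N))
random_student = rng.standard_normal((K, N))
# Non-specialized student: every hidden unit carries the same weight vector,
# so the committee collapses to a single perceptron.
non_specialized = np.tile(teacher.mean(axis=0), (K, 1))

for name, student in [("teacher copy", teacher), ("random", random_student),
                      ("non-specialized", non_specialized)]:
    print(name, generalization_error(teacher, student, rng))
```

The three students behave differently:

- A copy of the teacher has zero error.
- A random student sits near chance (about 0.5).
- The non-specialized student lands in between. Sharing one weight vector across units already captures part of the teacher, but only specialized hidden units can drive the error to zero.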

Physics and Deep Learning in General:

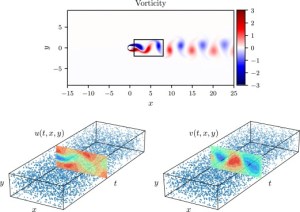

Physics-inspired models of GANs are an emerging area of research. For example, the solvable model of GANs in [Wang 2019] is a generalization of earlier statistical physics work on online learning in perceptrons. We will discuss multiple synergies between physics and deep learning. For example, many machine learning algorithms are trained with stochastic gradient descent. This has direct analogies in the physics of complex energy landscapes and their relation to dynamical behavior [Li 2016]. In neural networks with many layers, early layers are said to learn representations of the input data at a finer scale than later layers. In physics, this can be mapped onto the renormalization group, which is used to extract macroscopic behavior from microscopic rules [Bény 2013, Mehta 2014].
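
The renormalization-group idea of repeatedly coarse-graining microscopic degrees of freedom can be illustrated with the classic majority-rule block-spin transformation on a two-dimensional lattice of ±1 spins. This is a textbook real-space RG step. It is not the variational RG mapping of Mehta and Schwab or the construction of Bény. The lattice size, block size and the two test configurations are hypothetical.

```python
import numpy as np

def block_spin(spins: np.ndarray, b: int = 3) -> np.ndarray:
    """One majority-rule block-spin step: each b x b block of +/-1 spins becomes one spin.

    b is odd, so a block sum of b*b values of +/-1 can never be zero (no ties).
    """
    size = spins.shape[0]
    assert spins.shape == (size, size) and size % b == 0 and b % 2 == 1
    # Axis layout (block_row, row_in_block, block_col, col_in_block).
    block_sums = spins.reshape(size // b, b, size // b, b).sum(axis=(1, 3))
    return np.sign(block_sums).astype(int)


rng = np.random.default_rng(0)
disordered = rng.choice([-1, 1], size=(81, 81))               # independent random spins
ordered = np.where(rng.random((81, 81)) < 0.9, 1, -1)         # mostly aligned, 10% noise

for step in range(2):                                         # 81x81 -> 27x27 -> 9x9
    disordered, ordered = block_spin(disordered), block_spin(ordered)
    print(step + 1, disordered.shape, disordered.mean(), ordered.mean())
```

Under repeated coarse-graining, the noisy ordered configuration flows toward the fully aligned state, while the disordered one stays disordered. Microscopic fluctuations are discarded and only the macroscopic "phase" survives. This loosely parallels the idea that deeper layers retain coarser, more abstract features of the input.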

Stochastic Block Models:

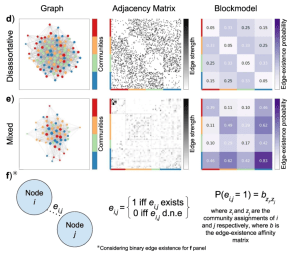

Stochastic block models are a class of generative models for random graphs. Many techniques used for phenotyping and clustering employ stochastic block models, which have widespread applicability in machine learning. In unsupervised learning, work on stochastic block models for detecting clusters/communities in sparse networks built on work from physics on low-rank matrix decomposition. The problem of community detection has been studied extensively by the physics community [Fortunato 2010, Eaton 2012]. Notably, the exact solution of the stochastic block model and the understanding of its algorithmic limitations came from spin glass theory in physics [Decelle 2011]. Additionally, a conjecture from physics about the belief propagation algorithm was the foundation for the discovery of a new class of spectral algorithms for sparse data [Krzakala 2013].
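
The following is a small sketch of the two-group stochastic block model. It does three things:

- samples a graph;
- evaluates the detectability threshold (c_in - c_out)² > 2(c_in + c_out) identified in [Decelle 2011] for sparse two-group SBMs, where c_in = n·p_in and c_out = n·p_out are the scaled connection probabilities;
- recovers the groups with a spectral split of the modularity matrix.

The modularity-based split and the dense parameters in the example are illustrative choices. In the sparse regime near the threshold, such standard spectral methods break down. That is the gap the non-backtracking spectral algorithm of [Krzakala 2013] was designed to close.

```python
import numpy as np

def sample_sbm(n, p_in, p_out, rng):
    """Two equal-size groups; edge probability p_in within a group, p_out across."""
    labels = np.repeat([0, 1], n // 2)
    probs = np.where(labels[:, None] == labels[None, :], p_in, p_out)
    upper = np.triu(rng.random((len(labels), len(labels))) < probs, k=1)
    adjacency = (upper | upper.T).astype(float)          # undirected, no self-loops
    return adjacency, labels


def detectable(c_in, c_out):
    """Two-group detectability threshold with c_in = n p_in and c_out = n p_out."""
    return (c_in - c_out) ** 2 > 2 * (c_in + c_out)


def modularity_split(adjacency):
    """Sign of the leading eigenvector of B = A - k k^T / (2m)."""
    degrees = adjacency.sum(axis=1)
    b = adjacency - np.outer(degrees, degrees) / degrees.sum()
    _, eigvecs = np.linalg.eigh(b)                        # eigenvalues in ascending order
    return (eigvecs[:, -1] > 0).astype(int)


def accuracy(pred, labels):
    """Fraction of correctly grouped nodes, up to swapping the two group names."""
    acc = float(np.mean(pred == labels))
    return max(acc, 1.0 - acc)


rng = np.random.default_rng(0)
n, p_in, p_out = 200, 0.5, 0.05
adjacency, labels = sample_sbm(n, p_in, p_out, rng)
print(detectable(n * p_in, n * p_out), accuracy(modularity_split(adjacency), labels))
```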

Boltzmann Machines:

Restricted Boltzmann Machines (RBMs) are models used for many applications within unsupervised learning. Even the name suggests that these models are directly inspired by physics. In fact, learning a Boltzmann machine from data is often called the inverse Ising problem in the physics literature [Nguyen 2017]. We will describe how the idea of Restricted Boltzmann Machines came about and how these models represent data. We will also cover recent advances in physics where RBMs are used to model protein families from their sequence information [Tubiana 2019]. More recent work on deep learning and RBMs will also be discussed.
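
A compact NumPy sketch of a binary RBM makes the physics vocabulary explicit. The energy E(v, h) = -a·v - b·h - vᵀWh is an Ising-type energy with couplings only between the visible and hidden layers. Summing out the hidden units gives the free energy F(v), with p(v) ∝ exp(-F(v)). Fitting the couplings so that the model reproduces the statistics of the data is the inverse problem mentioned above. Training here uses one step of contrastive divergence (CD-1), a standard approximation to the log-likelihood gradient. The class name, sizes, toy data and learning rate are illustrative assumptions, not taken from the cited works.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class RBM:
    """Binary RBM with visible units v in {0,1}^n_v and hidden units h in {0,1}^n_h."""

    def __init__(self, n_visible, n_hidden, rng):
        self.W = 0.01 * rng.standard_normal((n_visible, n_hidden))   # couplings
        self.a = np.zeros(n_visible)                                 # visible fields
        self.b = np.zeros(n_hidden)                                  # hidden fields
        self.rng = rng

    def energy(self, v, h):
        """E(v, h) = -a.v - b.h - v^T W h (broadcasts over leading batch axes)."""
        return -(v @ self.a) - (h @ self.b) - np.sum((v @ self.W) * h, axis=-1)

    def free_energy(self, v):
        """F(v) = -a.v - sum_j log(1 + exp(b_j + (v W)_j)), hidden units summed out."""
        return -(v @ self.a) - np.sum(np.logaddexp(0.0, self.b + v @ self.W), axis=-1)

    def cd1_step(self, v0, lr=0.1):
        """One contrastive-divergence (CD-1) update on a batch v0 of shape (batch, n_visible)."""
        ph0 = sigmoid(self.b + v0 @ self.W)                          # p(h=1 | data)
        h0 = (self.rng.random(ph0.shape) < ph0).astype(float)
        pv1 = sigmoid(self.a + h0 @ self.W.T)                        # one Gibbs step back
        v1 = (self.rng.random(pv1.shape) < pv1).astype(float)
        ph1 = sigmoid(self.b + v1 @ self.W)
        batch = v0.shape[0]
        # Data correlations minus (approximate) model correlations.
        self.W += lr * (v0.T @ ph0 - v1.T @ ph1) / batch
        self.a += lr * np.mean(v0 - v1, axis=0)
        self.b += lr * np.mean(ph0 - ph1, axis=0)


rng = np.random.default_rng(0)
rbm = RBM(n_visible=6, n_hidden=3, rng=rng)
data = np.array([[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]] * 20, dtype=float)
for _ in range(500):
    rbm.cd1_step(data)
print(rbm.free_energy(data[:2]), rbm.free_energy(np.array([1, 0, 1, 0, 1, 0], dtype=float)))
```

After training, the two training patterns typically have lower free energy than an unseen pattern such as [1, 0, 1, 0, 1, 0], which means the model considers them more probable.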

Symbolic regression:

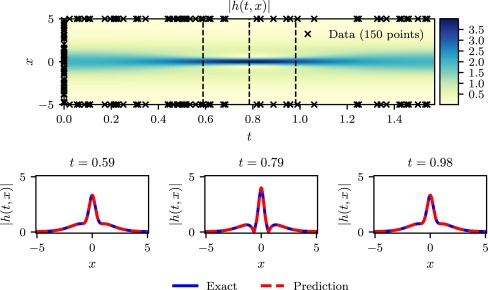

Symbolic regression is an area of AI that focuses on finding a symbolic expression that accurately matches a given data set. This area has seen cross-fertilization from physics. A pioneering work in this area is by Schmidt and Lipson [Schmidt 2009], who showed how free-form natural laws can be distilled from experimental data. More recent work by Udrescu and Tegmark [Udrescu 2019] introduced AI Feynman, a physics-inspired method for symbolic regression.
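
As a toy illustration of what symbolic regression searches for, the sketch below enumerates a small, hand-picked library of one-variable expressions g(x). For each candidate it fits y ≈ c·g(x) by least squares, then returns the best-scoring formula. The candidate library and the free-fall example (d = ½ g t² with g ≈ 9.81 m/s²) are assumptions for illustration. Real systems search vastly larger expression spaces. Schmidt and Lipson used evolutionary search over expression trees, and AI Feynman adds physics-inspired simplifications such as dimensional analysis and symmetry detection.

```python
import numpy as np

# Hypothetical candidate library: name -> function of a positive input x.
CANDIDATES = {
    "x": lambda x: x,
    "x**2": lambda x: x**2,
    "x**3": lambda x: x**3,
    "sqrt(x)": np.sqrt,
    "log(x)": np.log,
    "exp(x)": np.exp,
    "sin(x)": np.sin,
    "1/x": lambda x: 1.0 / x,
}


def brute_force_fit(x, y):
    """Return (name, c, mse) for the candidate g minimizing mean((y - c*g(x))**2)."""
    best = None
    for name, g in CANDIDATES.items():
        gx = g(x)
        c = float(gx @ y / (gx @ gx))            # closed-form least-squares scale
        mse = float(np.mean((y - c * gx) ** 2))
        if best is None or mse < best[2]:
            best = (name, c, mse)
    return best


t = np.linspace(0.5, 3.0, 50)                    # time in seconds
d = 0.5 * 9.81 * t**2                            # free-fall distance in metres
print(brute_force_fit(t, d))
```

The search recovers the quadratic law with coefficient g/2. Because the number of candidate expressions grows combinatorially with their size, exhaustive enumeration quickly becomes infeasible. This is why practical methods rely on guided search and domain-specific simplifications.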

Weaving it all together:

We will end the tutorial with the current limitations of physics-based models as applied to gaining insights into AI and machine learning models. For example, one prominent issue is that many physics-based models employ simplified models of the world that do not generalize. This simplification is what makes physics models solvable, often in closed form. However, it contrasts with the aims of traditional learning theory, which generally focuses on worst-case error bounds [Carleo 2019]. Another, complementary approach that is fast emerging in this area is to use machine learning to find approximate solutions to problems in physics, and then use approaches from physics to help advance machine learning []. We will also describe how physics-based models can further the cause of explainable AI in general and what the future may hold for this area. Lastly, we extrapolate from current trends and propose what a research programme for physics-inspired machine learning would look like and what the likely future trends in this field are.

Audience

This tutorial will be of interest to anyone interested in applications of machine learning and artificial intelligence models to the healthcare domain. The tutorial assumes some familiarity with the foundational concepts of machine learning but does not presuppose domain knowledge in physics.

Tutorial Outline

- Overview of Physics and AI
  - How can physics-inspired models power explainable AI?
- Foundational Issues
  - What constitutes Physics based models?
  - Statistical Mechanics and Machine Learning
  - Early work on Understanding Neural Nets with Statistical Mechanics
  - Phase Transitions and Generalization in Machine Learning
  - Learning Theory, Information Theory and Helmholtz Energy Equations
- Physics Based Models and Unsupervised Models
  - Phenotyping and Explainability
  - Spin-Glass Models for Clustering
  - Hyperbolic Embeddings
  - Spectral Algorithms
  - Restricted Boltzmann Machines (RBM)
- Physics Based Models and Deep Learning
  - Information Bottlenecks
  - Spin Glass Models and Neural Networks
  - Auto-Encoders and Signal Processing
  - GANs as Physics Models
  - Committee Machines
  - Learning in Deep Networks and Renormalization Groups
  - Generalization in Neural Networks
- Recent Interesting Directions
  - Stochastic Gradient Descent (SGD) and Glassy Models
  - Spectral Inference Networks
  - Non-Equilibrium Physics and Generative Models
- Symbolic Regression
  - Uses and limits of Symbolic Regression
  - Physics and Data Driven Knowledge Distillation
  - AI-Feynman
- Physics and the Limitations of Interpretability and Explanation in AI
  - Current Limitations of Physics Based Models
    - Solvability and Complexity
    - Generalizability
  - Physics based models for alternatives to Explainable AI
    - Theoretical Guarantees
    - Empirical Performance Guarantees
- Conclusion
  - Summary
  - Physics inspired models and the Future of AI

Similar Tutorials

Explainable AI and interpretable machine learning are active areas of research. There are a number of tutorials that focus on different aspects of explainable AI. However, to the best of our knowledge, this is the first tutorial that focuses on explainable models in AI that are inspired by physics. One of the tutors, Muhammad Aurangzeb Ahmad, has previously given a number of tutorials on explainable AI in healthcare and on visual methods for explainable AI. These tutorials are as follows:

- Boris Kovalerchuk, Muhammad Aurangzeb Ahmad, Ankur Teredesai. Deep Explanations in Machine Learning via Interpretable Visual Methods. PAKDD 2020, Singapore, June 11-16, 2020.

- Muhammad Aurangzeb Ahmad, Carly Eckert, Ankur Teredesai, Vikas Kumar. Interpretable Machine Learning: What Clinical Informaticists Need to Know. AMIA Clinical Informatics Conference, Atlanta, GA, April 30, 2019.

- Ankur Teredesai, Dr. Carly Eckert M.D., Muhammad Aurangzeb Ahmad, Vikas Kumar. Explainable Machine Learning Models for Healthcare AI. ACM Seminar, September 26, 2018.

- Muhammad Aurangzeb Ahmad, Dr. Carly Eckert M.D., Ankur Teredesai, Vikas Kumar. Explainable Models for Healthcare AI. KDD 2018, London, United Kingdom, August 19-23, 2018.

- Muhammad Aurangzeb Ahmad, Dr. Carly Eckert M.D., Ankur Teredesai, Vikas Kumar. Interpretable Machine Learning in Healthcare. International Conference on Bioinformatics, Computational Biology, and Health Informatics (BCB 2018), Washington, DC, USA, August 29 – September 1, 2018.

- Muhammad Aurangzeb Ahmad, Dr. Carly Eckert M.D., Ankur Teredesai, Vikas Kumar. Interpretable Machine Learning in Healthcare. 2018 IEEE International Conference on Healthcare Informatics, New York, NY, USA, June 4-7, 2018.

The current tutorial differs from the aforementioned tutorials, but it builds upon them and upon the cross-disciplinary (computer science and physics) collaboration between the tutors.

Tutor Bios

Muhammad Aurangzeb Ahmad is a Research Scientist and Principal Data Scientist at KenSci Inc., a machine learning/AI healthcare informatics company focused on risk prediction in healthcare. He is also an Affiliate Associate Professor in the Department of Computer Science at the University of Washington Tacoma. He has held academic positions at the University of Minnesota, the Indian Institute of Technology Kanpur, and Istinye University in Turkey. He has published more than 50 research papers in top machine learning and data mining conferences such as KDD, AAAI, SDM, and PKDD. His current research focuses on Responsible AI that is explainable, bias-free, robust, ethical, and aligned with human values.

Sener Ozoner is an Associate Professor in the Faculty of Engineering at Istinye University in Istanbul, Turkey. He has a PhD in Physics from the University of Minnesota and was a postdoc at the Institute for Nuclear Theory at the University of Washington. His past work focused on untangling complex correlations between strongly interacting subatomic particles in CERN collider data. His current work centers on materials discovery. There, he uses machine learning techniques to optimize time-consuming quantum many-body simulations, which are used to investigate the physical and chemical properties of materials.

References:

- Aubin, B., A. Maillard, F. Krzakala, N. Macris, L. Zdeborová, et al. “The committee machine: Computational to statistical gaps in learning a two-layers neural network.” In Advances in Neural Information Processing Systems, pp. 3223-3234. 2018. Preprint arXiv:1806.05451.

- Bény, Cédric. “Deep learning and the renormalization group.” arXiv preprint arXiv:1301.3124 (2013).

- Carleo, Giuseppe, Ignacio Cirac, Kyle Cranmer, Laurent Daudet, Maria Schuld, Naftali Tishby, Leslie Vogt-Maranto, and Lenka Zdeborová. “Machine learning and the physical sciences.” Reviews of Modern Physics 91, no. 4 (2019): 045002.

- Decelle, Aurelien, Florent Krzakala, Cristopher Moore, and Lenka Zdeborová. “Asymptotic analysis of the stochastic block model for modular networks and its algorithmic applications.” Physical Review E 84, no. 6 (2011): 066106.

- Doersch, Carl. “Tutorial on variational autoencoders.” arXiv preprint arXiv:1606.05908 (2016).

- Eaton, Eric, and Rachael Mansbach. “A spin-glass model for semi-supervised community detection.” In Twenty-Sixth AAAI Conference on Artificial Intelligence. 2012.

- Engel, Andreas, and Christian Van den Broeck. Statistical mechanics of learning. Cambridge University Press, 2001.

- Fortunato, Santo. “Community detection in graphs.” Physics Reports 486, no. 3-5 (2010): 75-174.

- Goldt, Sebastian, Madhu Advani, Andrew M. Saxe, Florent Krzakala, and Lenka Zdeborová. “Dynamics of stochastic gradient descent for two-layer neural networks in the teacher-student setup.” In Advances in Neural Information Processing Systems, pp. 6979-6989. 2019.

- Karpatne, Anuj, William Watkins, Jordan Read, and Vipin Kumar. “Physics-guided neural networks (PGNN): An application in lake temperature modeling.” arXiv preprint arXiv:1710.11431 (2017).

- Kingma, Diederik P., and Max Welling. “Auto-encoding variational bayes.” arXiv preprint arXiv:1312.6114 (2013).

- Krzakala, Florent, Cristopher Moore, Elchanan Mossel, Joe Neeman, Allan Sly, Lenka Zdeborová, and Pan Zhang. “Spectral redemption in clustering sparse networks.” Proceedings of the National Academy of Sciences 110, no. 52 (2013): 20935-20940.

- Li, Chunyuan, Changyou Chen, David Carlson, and Lawrence Carin. “Preconditioned stochastic gradient Langevin dynamics for deep neural networks.” In Thirtieth AAAI Conference on Artificial Intelligence. 2016.

- Mehta, Pankaj, and David J. Schwab. “An exact mapping between the variational renormalization group and deep learning.” arXiv preprint arXiv:1410.3831 (2014).

- Molnar, Christoph. Interpretable machine learning. Lulu.com, 2019.

- Nguyen, H. Chau, Riccardo Zecchina, and Johannes Berg. “Inverse statistical problems: from the inverse Ising problem to data science.” Advances in Physics 66, no. 3 (2017): 197-261.

- Shwartz-Ziv, Ravid, and Naftali Tishby. “Opening the black box of deep neural networks via information.” arXiv preprint arXiv:1703.00810 (2017).

- Tishby, Naftali, Fernando C. Pereira, and William Bialek. “The information bottleneck method.” arXiv preprint physics/0004057 (2000).

- Tishby, Naftali, and Noga Zaslavsky. “Deep learning and the information bottleneck principle.” In 2015 IEEE Information Theory Workshop (ITW), pp. 1-5. IEEE, 2015.

- Tubiana, Jérôme, Simona Cocco, and Rémi Monasson. “Learning protein constitutive motifs from sequence data.” eLife 8 (2019): e39397.

- Schmidt, Michael, and Hod Lipson. “Distilling free-form natural laws from experimental data.” Science 324, no. 5923 (2009): 81-85.

- Udrescu, Silviu-Marian, and Max Tegmark. “AI Feynman: A physics-inspired method for symbolic regression.” arXiv preprint arXiv:1905.11481 (2019).

- Valiant, Leslie G. “A theory of the learnable.” Communications of the ACM 27, no. 11 (1984): 1134-1142.

- Wang, Tong, Cynthia Rudin, Finale Velez-Doshi, Yimin Liu, Erica Klampfl, and Perry MacNeille. “Bayesian rule sets for interpretable classification.” In 2016 IEEE 16th International Conference on Data Mining (ICDM), pp. 1269-1274. IEEE, 2016.

- Wang, Chuang, Hong Hu, and Yue Lu. “A Solvable High-Dimensional Model of GAN.” In Advances in Neural Information Processing Systems, pp. 13759-13768. 2019.